Docker

Docker is an open platform for developing, shipping, and running applications inside containers. By separating applications from the underlying infrastructure, it makes software easier to build, distribute, and run, so you can ship quickly.

Docker packages an application together with only what it needs to run, which keeps containers lightweight and makes the application behave consistently across different computers or systems. As a result, software deployment becomes easier, more efficient, and more flexible.

Docker core concepts

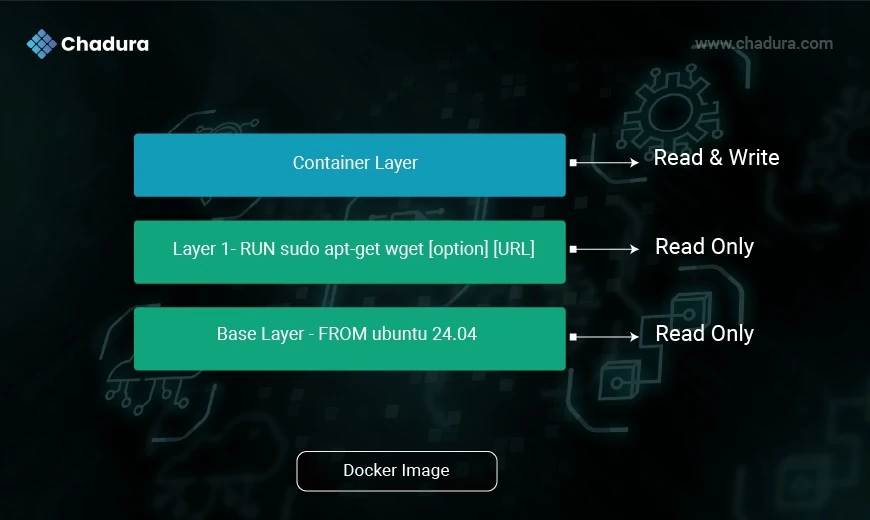

1. Docker Images: Think of these as blueprints for creating containers. A Docker image is a compact, self-contained package that holds everything needed to run the software: the code, the runtime environment, the libraries, and the configuration files. Images are immutable: once built, an image is never modified, and any change means building a new image.

2. Docker Container: A container is an isolated environment that runs an application and includes everything it needs to operate: code, runtime, system tools, libraries, and settings. Containers share the host OS kernel, which makes them fast and lightweight.

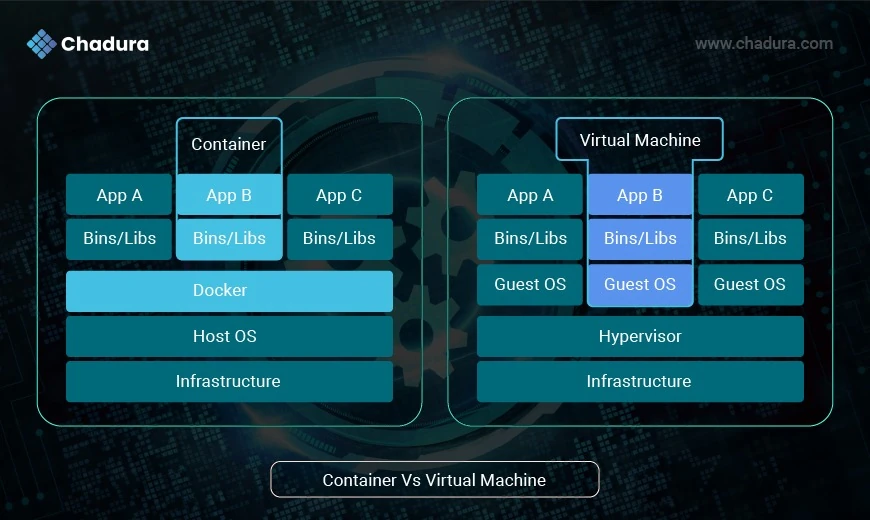

Docker Container vs. VM

- Virtual machines each run a full guest operating system, so they are often slow and take a long time to boot.

- In contrast, containers start faster because they share the kernel of the existing host operating system instead of booting their own.

- This means containers do not reserve host resources up front the way a VM does. Each container still has its own libraries and binaries for the application it runs.

- Containers are managed by a containerization engine. Docker is an example of a platform you can use to build and run containers.

3. Dockerfiles

Dockerfiles are like recipes for creating Docker images. A Dockerfile is a plain text file containing a step-by-step list of instructions that tell Docker how to build an image. Because the same instructions run on every build, image creation becomes simpler and more repeatable, which matters when you need to reproduce the same setup many times.
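To make the recipe idea concrete, here is a minimal sketch in Python that writes out the text of a small Dockerfile. The base image tag (python:3.12-slim), the file names (requirements.txt, app.py), and the helper name render_dockerfile are assumptions chosen for illustration, not something taken from a real project.

```python
def render_dockerfile(base_image: str, entry_script: str) -> str:
    """Return the text of a small Dockerfile for a Python application.

    Hypothetical helper: the instruction list is one common layout,
    not the only way to write a Dockerfile.
    """
    lines = [
        f"FROM {base_image}",                 # start from an existing base image
        "WORKDIR /app",                       # working directory for later steps
        "COPY requirements.txt .",            # copy the dependency list first
        "RUN pip install --no-cache-dir -r requirements.txt",  # install dependencies
        "COPY . .",                           # copy the rest of the application
        f'CMD ["python", "{entry_script}"]',  # default command for new containers
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim", "app.py"))
```

Saving the printed text as a file named Dockerfile next to the application code would give "docker build" something to work from, assuming those files exist.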

4. Docker Hub / Registry:

Docker Hub is a cloud-hosted registry run by Docker that lets you share your Docker images with others and pull images published by other developers and organizations. Its large collection of images is contributed by Docker and its user community. A registry also makes it easy to manage different versions of an image.

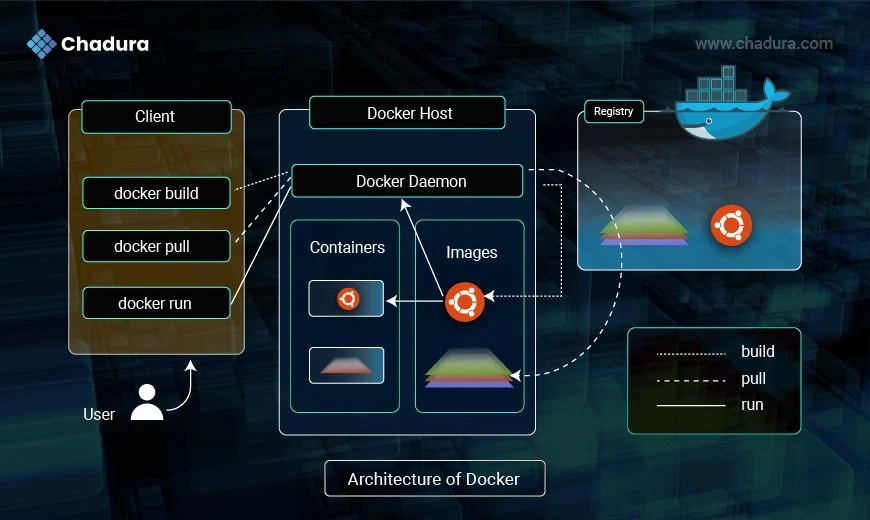

Architecture of Docker

Docker works through the interaction of three parts: the Docker Client, the Docker Host, and the Docker Registry. The Docker Client is the user-facing interface where commands like docker build, docker run, and docker push are issued. These commands are sent to the Docker Host, which runs the Docker daemon. The daemon executes them, handling tasks such as building images, running containers, and managing resources. When building an image, the Docker Host can pull base images from a Docker Registry, which serves as centralized storage for Docker images. The Registry can be public, like Docker Hub, or private for internal use. Once an image is built, it can be pushed to the Registry for sharing or deployment. This client-host-registry workflow makes application development and deployment portable across different environments.

Docker Client

Docker Build: The "docker build" command creates a new image from a Dockerfile stored on your computer. The client sends the Dockerfile and the files it needs (the build context) to the Docker daemon, which then builds the image.

Docker Pull: The "docker pull" command downloads an image from a registry to your local system. It is commonly used to fetch pre-built images.

Docker Run: The "docker run" command starts a container from an image. Use it whenever you want to launch an application or service.
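The three client commands can be sketched as small Python helpers that assemble the argument lists a client would issue. The helper names and the image names (myapp:1.0, nginx:latest) are hypothetical; only the docker subcommands and the -t, -d, and --name flags are real CLI options. Nothing is executed here, so the sketch works without Docker installed.

```python
import shlex
from typing import List, Optional


def build_cmd(tag: str, context: str = ".") -> List[str]:
    """docker build: build an image from the Dockerfile in `context` and tag it."""
    return ["docker", "build", "-t", tag, context]


def pull_cmd(image: str) -> List[str]:
    """docker pull: download an image from a registry."""
    return ["docker", "pull", image]


def run_cmd(image: str, name: Optional[str] = None, detach: bool = False) -> List[str]:
    """docker run: start a container from an image."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")          # run in the background
    if name:
        cmd += ["--name", name]   # give the container a readable name
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    for cmd in (
        build_cmd("myapp:1.0"),
        pull_cmd("nginx:latest"),
        run_cmd("myapp:1.0", name="web", detach=True),
    ):
        print(shlex.join(cmd))
```

On a machine with Docker installed, each list could be passed to subprocess.run(cmd, check=True) to actually send the command to the daemon.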

Docker Host

Docker Images: This is where Docker stores images on your computer. When you download an image from the registry or create one yourself, it gets saved here in local storage.

Running Containers

The image shows several containers actively running on a Docker host. Each container is a separate instance of an image and operates independently, with its own environment and resources.

Docker Registry

The Docker daemon communicates with a registry to download images when you run the docker pull command. It also communicates with the registry to upload new images you've created on your local computer if you need to share them with others or keep them stored in a remote location.

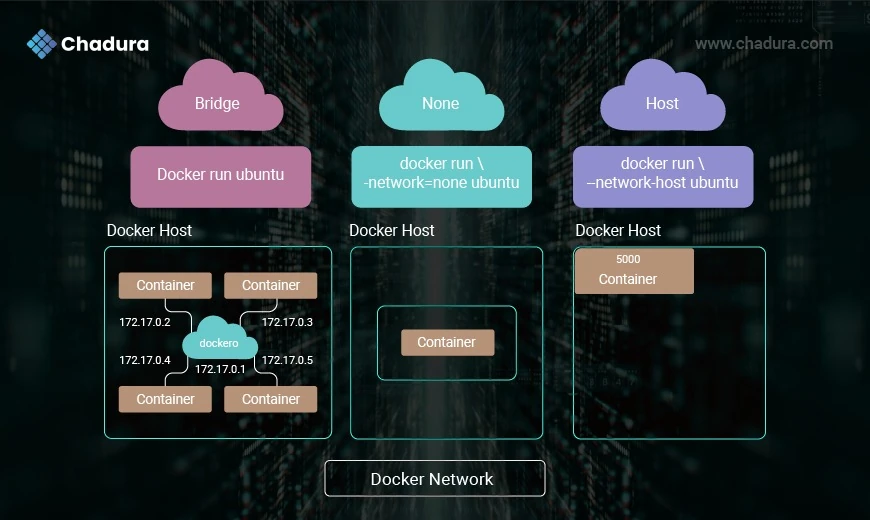

Types of Docker Networks

Bridge (default) Network

- Used for standalone containers.

- Each container gets its own IP address, and containers on the same bridge can talk to each other.

Example: docker run --network bridge <image>

Host Network

- Shares the host’s network stack.

- No isolation; the container uses the host’s IP address.

- Useful for performance or access to host services.

Example: docker run --network host <image>

None Network

- Disables networking.

- The container is isolated from all networks.

Example: docker run --network none <image>
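As a rough sketch of how the three modes are selected, the Python helper below builds a docker run argument list for a given mode. The function name run_with_network and the nginx image are assumptions for illustration. The real --network flag also accepts other values, such as user-defined network names, which this sketch deliberately rejects to stay within the three types described above.

```python
from typing import List

# The three built-in modes covered in this section.
NETWORK_MODES = ("bridge", "host", "none")


def run_with_network(image: str, mode: str = "bridge") -> List[str]:
    """Build a `docker run` command that attaches the container to `mode`."""
    if mode not in NETWORK_MODES:
        raise ValueError(f"unsupported mode {mode!r}; expected one of {NETWORK_MODES}")
    return ["docker", "run", "--network", mode, image]


if __name__ == "__main__":
    for mode in NETWORK_MODES:
        print(" ".join(run_with_network("nginx", mode)))
```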

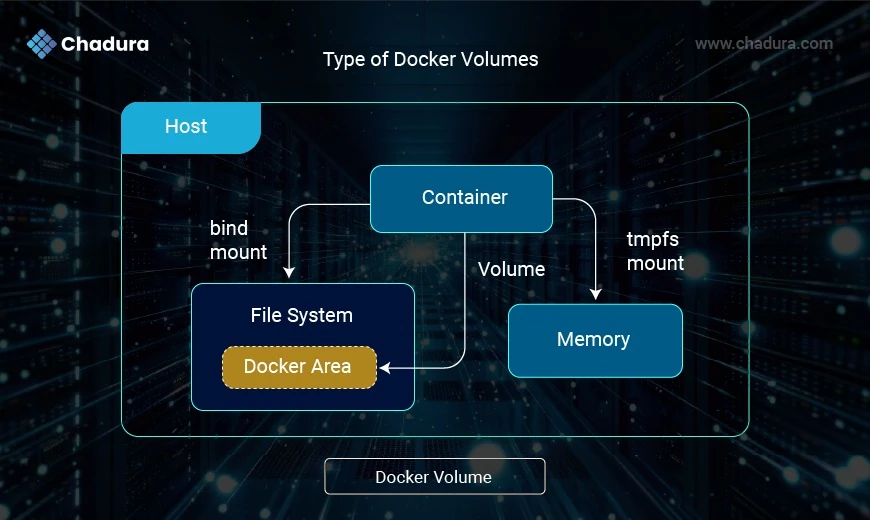

Docker Volume

Docker provides different storage options to persist and manage data: bind mounts, volumes, and tmpfs mounts, each serving different use cases.

Bind mount

Description: Maps a specific path on the host machine to a path inside the container.

Use Case: Useful during development when you want the container to reflect changes made on the host in real-time.

Pros:

- Full control over the host directory

- Real-time file updates

Cons:

- Platform-dependent

- No management via Docker CLI

- Less secure and portable

Volume

Description: Managed by Docker, volumes are stored under Docker’s internal storage path (e.g., /var/lib/docker/volumes/).

Use Case: Ideal for production; cleaner separation of data and application logic.

Pros:

- Portable, secure, and easy to back up

- Managed via Docker CLI

Cons:

- Less visibility on the host unless inspected manually

Tmpfs mount

Description: Mounts a temporary filesystem held in the host's memory (RAM) into the container; nothing is stored on disk.

Use Case: Ideal for storing sensitive data (e.g., secrets, temporary files) that shouldn’t persist or touch disk.

Pros:

- Fast (memory-based)

- Data never touches disk

Cons:

- Data lost when the container stops

- Limited by available system memory
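The three storage options map onto the type field of the --mount flag of docker run. The sketch below assembles those flag values in Python; the helper names, the paths (/home/dev/src, /app, /data, /run/secrets), the volume name app-data, and the image myapp:1.0 are all hypothetical.

```python
from typing import List


def bind_mount(host_path: str, container_path: str) -> str:
    """Bind mount: map a host path (must be absolute) into the container."""
    return f"type=bind,source={host_path},target={container_path}"


def volume_mount(volume_name: str, container_path: str) -> str:
    """Volume: Docker-managed storage referenced by name."""
    return f"type=volume,source={volume_name},target={container_path}"


def tmpfs_mount(container_path: str) -> str:
    """tmpfs: memory-backed storage with no source on the host."""
    return f"type=tmpfs,target={container_path}"


def run_with_mounts(image: str, mounts: List[str]) -> List[str]:
    """Build a `docker run` command with one --mount flag per entry."""
    cmd = ["docker", "run"]
    for mount in mounts:
        cmd += ["--mount", mount]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    mounts = [
        bind_mount("/home/dev/src", "/app"),
        volume_mount("app-data", "/data"),
        tmpfs_mount("/run/secrets"),
    ]
    print(" ".join(run_with_mounts("myapp:1.0", mounts)))
```

Note that only the bind and volume mounts name a source; the tmpfs mount has none because its contents live only in memory.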

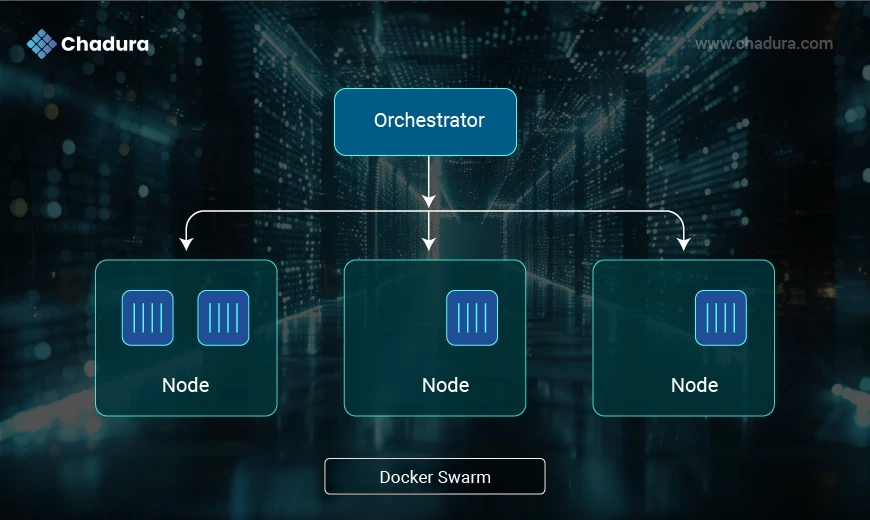

Docker Swarm

Docker Swarm is Docker's native clustering and orchestration tool that allows you to manage a group of Docker hosts (nodes) as a single virtual system. It enables container orchestration, meaning you can deploy, scale, and manage multi-container applications across multiple machines.

Swarm

- A cluster of Docker nodes (machines running Docker).

- Managed by Swarm Mode

Node

- A single Docker engine instance in the swarm.

- Nodes come in two types:

- Manager Node: Manages the cluster and schedules tasks.

- Worker Node: Executes tasks assigned by the manager.

Services

- A declaration of the work the swarm should run, which it carries out as one or more tasks.

- You define the image, ports, number of replicas, etc.

Task

- A single container running as part of a service.

Overlay Network

- Allows services to communicate across multiple Docker hosts securely.
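The relationship between services, tasks, and nodes can be illustrated with a toy model in Python. This is a simplified sketch, not Swarm's actual scheduler: it assumes the manager simply places each task on whichever worker currently has the fewest tasks, and the node names and the service.N task labels are chosen for illustration.

```python
from typing import Dict, List


def schedule(service: str, replicas: int, workers: List[str]) -> Dict[str, List[str]]:
    """Assign `replicas` tasks of `service` to workers, least-loaded first."""
    if not workers:
        raise ValueError("at least one worker node is required")
    placement: Dict[str, List[str]] = {worker: [] for worker in workers}
    for slot in range(1, replicas + 1):
        # Pick the worker running the fewest tasks; ties go to list order.
        target = min(workers, key=lambda worker: len(placement[worker]))
        placement[target].append(f"{service}.{slot}")
    return placement


if __name__ == "__main__":
    result = schedule("web", 5, ["worker-1", "worker-2", "worker-3"])
    for node, tasks in result.items():
        print(node, tasks)
```

With five replicas and three workers, the model puts two tasks on each of the first two workers and one on the third, which shows how a single service definition fans out into tasks across the cluster.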

Benefits of Docker Swarm

- Easy setup (built into Docker)

- High availability (via manager quorum)

- Rolling updates & rollbacks

- Load balancing across nodes

- Secure by default (TLS encryption between nodes)
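The high-availability point depends on manager quorum: Swarm managers coordinate through Raft consensus, so a majority of managers must stay reachable for the cluster to keep accepting changes. The arithmetic can be sketched in Python; the function names are hypothetical.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """How many managers can fail while a majority remains."""
    return managers - quorum(managers)


if __name__ == "__main__":
    for n in (1, 3, 4, 5, 7):
        print(f"{n} managers: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

The numbers show why odd manager counts are usually preferred: going from three managers to four raises the quorum from two to three without tolerating any additional failure.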

Tools Related to Docker Swarm & Container Orchestration

Orchestration Platforms

- Docker Swarm – Docker’s built-in clustering and orchestration tool.

- Kubernetes – Industry-standard container orchestration system.

- OpenShift – Enterprise-grade Kubernetes platform by Red Hat.

- Rancher – Kubernetes cluster management platform (previously supported Docker Swarm too).

- Nomad (by HashiCorp) – Lightweight orchestrator for Docker and other workloads.

- Mirantis Kubernetes Engine – Formerly Docker Enterprise; supports both Swarm and Kubernetes.

- Mesosphere (DC/OS) – Data center OS built on Apache Mesos; supports container orchestration.

Lightweight Kubernetes Alternatives

- K3s – Lightweight Kubernetes distribution optimized for IoT and edge devices.

- MicroK8s – Minimal, snap-based Kubernetes by Canonical.

- Minikube – Local single-node Kubernetes cluster for testing and development.

Management & GUI Tools

- Portainer – Easy-to-use UI for managing Docker, Swarm, and Kubernetes clusters.

- Lens – Kubernetes IDE for managing and visualizing clusters.

- K9s – Terminal-based UI to interact with Kubernetes clusters.

- Octant – Open-source Kubernetes dashboard for developers.

Networking & Load Balancing

- Traefik – Dynamic reverse proxy and load balancer for Docker Swarm & Kubernetes.

- HAProxy – High-performance TCP/HTTP load balancer often used with Docker services.

- Cilium – Advanced networking and security plugin for Kubernetes.

CI/CD & DevOps Tools

- Jenkins X – CI/CD for Kubernetes with GitOps.

- Argo CD – Declarative GitOps continuous delivery tool for Kubernetes.

- Flux – GitOps operator for Kubernetes.

Benefits of Docker

Portability

Docker containers can run on any system that supports Docker. Whether it's on a developer's laptop, an on-premises server, or through a cloud service, Docker makes it simple to transfer applications between different environments without hassle.

Consistency

Using containers solves the common issue of "it works on my machine." They ensure that the environment is the same from the development phase all the way to production, reducing unforeseen problems when transitioning between stages.

Efficiency

Docker containers are lightweight and fast compared to traditional virtual machines. They make better use of system resources and can start almost instantly.

Scalability

Docker works efficiently with orchestration tools like Kubernetes and Docker Swarm. These tools make it easy to expand applications by distributing workload across multiple systems, helping applications grow smoothly as demand increases.

Real-World Use Cases

- Microservices Architecture: Docker allows each service to be packaged and deployed independently.

- CI/CD Pipelines: Integrating Docker into CI/CD tools ensures consistent builds and faster deployments.

- Testing Environments: Easily spin up multiple testing environments with different configurations.

- Legacy App Modernization: Encapsulate legacy apps into containers without rewriting code.

Conclusion

Docker is more than just a tool. It changes the way we create and run applications. It is fast, flexible, and efficient, enabling teams to build and manage software effectively. By using Docker, developers and operations teams can concentrate more on making excellent software and worry less about the technical details of the systems they use.